Link: https://zhuanlan.zhihu.com/p/391910611

Source: Zhihu

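Copyright belongs to the author. For commercial reproduction, contact the author for permission; for non-commercial reproduction, credit the source.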

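1. Simulating a Weibo login is cumbersome, so I skip it and authenticate with a cookie copied from a logged-in browser session.

2. Weibo paginates comments with `max_id`: each request's `max_id` is the value returned by the previous page's response.

Since there is no simulated login, the only thing that needs attention is the `max_id` used for paging. Without further ado, here is the code.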
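The `max_id` paging described above is a cursor scheme: every response carries the cursor for the next request. Below is a minimal sketch of that loop, assuming that a `max_id` of 0 (or a missing one) means there is no further page. `iter_pages` and the in-memory `PAGES` dict are hypothetical stand-ins for the real HTTP calls, not part of Weibo's API.

```python
from typing import Callable, Iterator, Optional


def iter_pages(fetch: Callable[[Optional[int]], dict], max_pages: int = 20) -> Iterator[list]:
    """Yield each page's "data" list, following max_id cursors."""
    max_id: Optional[int] = None  # the first request carries no max_id
    for _ in range(max_pages):
        page = fetch(max_id)
        yield page["data"]
        # Assumption: a max_id of 0 (or none at all) marks the last page
        max_id = page.get("max_id") or None
        if max_id is None:
            break


# Hypothetical fake responses, keyed by the max_id that requests them
PAGES = {
    None: {"max_id": 111, "data": ["c1", "c2"]},
    111: {"max_id": 222, "data": ["c3"]},
    222: {"max_id": 0, "data": ["c4"]},
}

if __name__ == "__main__":
    for comments in iter_pages(PAGES.__getitem__):
        print(comments)
```

Stopping on a 0 cursor matters: if the loop simply dropped `max_id` again, the next request would fetch page 1 a second time and duplicate comments.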

§ A helper function that writes the data to a .csv file:


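```python
import csv
import os


def write_csv(merge_data, filename):
    """Write rows to <parent of this file's directory>/datas/<filename>."""
    data_dir = os.path.join(
        os.path.dirname(os.path.dirname(os.path.abspath(__file__))), "datas"
    )
    os.makedirs(data_dir, exist_ok=True)  # create datas/ if it is missing
    filename = os.path.join(data_dir, filename)
    # utf-8-sig writes a BOM so Excel recognizes the Chinese text as UTF-8
    with open(filename, mode="w", newline="", encoding="utf-8-sig") as w:
        # Default quotechar ("); a tab as quotechar would wrap every field in tabs
        writer = csv.writer(w, quoting=csv.QUOTE_ALL)
        writer.writerow(["评论时间", "评论内容"])  # comment time, comment text
        for row in merge_data:
            # Flatten one level of nested lists so each row is a flat list
            data = []
            for item in row:
                if isinstance(item, list):
                    data.extend(item)
                else:
                    data.append(item)
            writer.writerow(data)
```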
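To check the row-flattening logic without touching the `datas` folder, the sketch below writes to an in-memory buffer instead of a file. `flatten_row` and `rows_to_csv_text` are hypothetical helper names, and the English column headers are placeholders.

```python
import csv
import io


def flatten_row(row):
    """Expand one level of nested lists, as write_csv does."""
    flat = []
    for item in row:
        if isinstance(item, list):
            flat.extend(item)
        else:
            flat.append(item)
    return flat


def rows_to_csv_text(rows, header=("comment_time", "comment_text")):
    """Render rows as CSV text in memory (default minimal quoting)."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(header)
    for row in rows:
        writer.writerow(flatten_row(row))
    return buf.getvalue()


if __name__ == "__main__":
    print(rows_to_csv_text([["2021-07-19 08:06:52", "nice, really"]]))
```

With the default minimal quoting, only fields containing a comma, quote, or newline get wrapped in double quotes.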

§ Keep the request headers in a config file:

It is just a dictionary: log in to Weibo in your browser first, then copy the cookie into it.
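A hypothetical `setting.py` matching the import `from beautifulExample import setting as sp` might look like the sketch below. Only the name `DEFAULT_HEADERS` comes from the code; the values are placeholders, and which headers Weibo requires beyond the cookie is an assumption worth verifying in your browser's developer tools.

```python
# beautifulExample/setting.py (hypothetical layout; values are placeholders)

# Copy these from a logged-in browser session: open DevTools, select any
# weibo.com/ajax request in the Network tab, and read its request headers.
DEFAULT_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0 Safari/537.36"
    ),
    # The full Cookie header value, pasted as a single string
    "Cookie": "PASTE_YOUR_COOKIE_HERE",
}
```

Keeping the cookie in its own module means it can be refreshed when it expires without editing the crawler, and the file can be excluded from version control.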

§ The crawler functions and the main script:

```python
# -*- coding: utf-8 -*-
# ** createDate: 2021/7/19 11:38
# ** scriptFile: weibo_com.py
"""Scrape the comments on one Weibo post and save them to a .csv file."""

import re
import time

import requests

from beautifulExample import setting as sp
from beautifulExample.common.commonFunction import write_csv


def request_method(url, params=None):
    """Send a GET request with the configured headers and return the JSON body."""
    with requests.Session() as s:
        # The headers (including the cookie) live in the config file
        s.headers.update(sp.DEFAULT_HEADERS)
        response = s.get(url=url, params=params, timeout=10)
        response.raise_for_status()
        return response.json()


def show_message():
    """Return the post's numeric id and its author's uid."""
    params = {"id": "Kpq3A6A1m"}  # the post id as it appears in the post URL
    url = "https://weibo.com/ajax/statuses/show"
    response = request_method(url, params=params)
    return response["id"], response["user"]["id"]


def comments_data(cid, uid, max_id=None):
    """Fetch one page of comments; return (next max_id, list of comments)."""
    params = {
        "is_reload": 1,
        "id": cid,
        "is_show_bulletin": 2,
        "is_mix": 0,
        "count": 20,
        "uid": uid,
    }
    # The first page is requested without max_id; later pages pass the
    # max_id returned by the previous response
    if max_id:
        params["max_id"] = max_id
    url = "https://weibo.com/ajax/statuses/buildComments"
    response = request_method(url, params=params)
    return response["max_id"], response["data"]


if __name__ == "__main__":
    save_data = []

    # Look up the post id (cid) and the author id (uid)
    cid, uid = show_message()

    max_id = 0  # 0 means "send no max_id", i.e. request the first page
    for _ in range(20):  # fetch at most 20 pages
        max_id, comments = comments_data(cid, uid, max_id)

        for j in comments:
            # created_at looks like "Mon Jul 19 08:06:52 +0800 2021";
            # convert it to "2021-07-19 08:06:52"
            time_data = time.strftime(
                "%Y-%m-%d %H:%M:%S",
                time.strptime(j.get("created_at"), "%a %b %d %H:%M:%S +0800 %Y"),
            )
            # Strip emoji codes such as "[doge]"; the non-greedy pattern keeps
            # the text between two emoji codes
            comments_text = re.sub(r"\[.*?\]", "", j.get("text_raw", ""))
            # One [time, text] row per comment, for writing to the .csv file
            save_data.append([time_data, comments_text])

        # A returned max_id of 0 means there is no next page; without this
        # check the loop would restart at page 1 and duplicate comments
        if not max_id:
            break

    # Write all rows to the file
    write_csv(save_data, "weibo_comments.csv")
```
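The two per-comment transformations in the loop can be tried in isolation. This sketch (the helper names are hypothetical) converts a `created_at` value and shows why the emoji pattern should be non-greedy: the greedy `\[.*\]` deletes everything from the first `[` to the last `]`, including the ordinary text between two emoji codes.

```python
import re
import time


def format_created_at(created_at: str) -> str:
    """'Mon Jul 19 08:06:52 +0800 2021' -> '2021-07-19 08:06:52'."""
    parsed = time.strptime(created_at, "%a %b %d %H:%M:%S +0800 %Y")
    return time.strftime("%Y-%m-%d %H:%M:%S", parsed)


def strip_emoji_greedy(text: str) -> str:
    # Greedy: removes everything from the first "[" to the last "]"
    return re.sub(r"\[.*\]", "", text)


def strip_emoji(text: str) -> str:
    # Non-greedy: removes each "[...]" code on its own
    return re.sub(r"\[.*?\]", "", text)


if __name__ == "__main__":
    print(format_created_at("Mon Jul 19 08:06:52 +0800 2021"))  # 2021-07-19 08:06:52
    sample = "so good[doge] really[like]"
    print(strip_emoji_greedy(sample))  # so good
    print(strip_emoji(sample))  # so good really
```

Note that the hard-coded `+0800` in the format string only matches timestamps in that offset; a different offset would raise `ValueError`.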

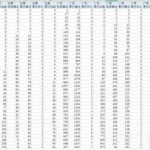

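Result written to the .csv file:

The same result opened in Excel:

Don't these comments feel a little familiar?

Gitee repository link (you can clone it directly with git):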